Sunday, March 25, 2018

CQRS Commands, stateless services, and thin clients

Looking at the CQRS architectural pattern, there are interactions that maybe I didn't fully understand at first. For example, if the targets of queries and commands are the Managers from the IDesign methodology, this has a direct implication for the statefulness of the Manager versus the statelessness of the client. You need a thin client with little state; commands are routed to the Manager in the back end, and any updates come back to the thin client as a response object or an update message.
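To make the routing concrete, here is a minimal sketch in Python. All of the names (`RenameItem`, `GetItem`, `ItemManager`, `ThinClient`) are hypothetical and only illustrate the shape of the interaction: the Manager owns the state, and the client just forwards messages and displays whatever comes back.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RenameItem:
    """Command: a request to change state (hypothetical example)."""
    item_id: int
    new_name: str


@dataclass(frozen=True)
class GetItem:
    """Query: a read-only request (hypothetical example)."""
    item_id: int


@dataclass
class ItemManager:
    """Stand-in for a back-end Manager: the only place state is held."""
    _items: dict = field(default_factory=dict)

    def handle_command(self, cmd: RenameItem) -> dict:
        self._items[cmd.item_id] = cmd.new_name
        # The response object carries the update back to the client.
        return {"item_id": cmd.item_id, "name": cmd.new_name}

    def handle_query(self, query: GetItem) -> dict:
        return {"item_id": query.item_id, "name": self._items.get(query.item_id)}


class ThinClient:
    """Holds no domain state; it only forwards messages to the Manager."""

    def __init__(self, manager: ItemManager):
        self._manager = manager

    def rename(self, item_id: int, new_name: str) -> dict:
        return self._manager.handle_command(RenameItem(item_id, new_name))

    def show(self, item_id: int) -> dict:
        return self._manager.handle_query(GetItem(item_id))
```

In a real system the call from client to Manager would cross a process or network boundary, but the division of responsibility would be the same.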

Tuesday, March 06, 2018

Growing out of a legacy application

Trying to grow out of a dated legacy application is no small feat, and there is undoubtedly a cottage industry dedicated to consulting on how to do just that.

The most effective pattern I've encountered for this is the Strangler Application, named after the strangler fig trees Fowler saw in Australia. But it seems that there are better and worse ways of approaching a Strangler application.

I think Fowler's description captures the key aspects: a proper Strangler application needs both a static data strategy ("asset capture") and a dynamic behavior strategy ("event interception"). It is important that both parts take part in a two-way strategy for moving data and events back and forth. Fowler stresses the strategic importance of this two-way strategy, as it creates flexibility in determining the order of asset capture. Words to live by...

Monday, March 05, 2018

<retro> What is Image Quality? </retro>

I saw a talk at AAPM 2015 by Kyle Myers (from the FDA's Office of Science and Engineering Laboratories) about the Channelized Hotelling Observer (Barrett et al 1993), which is a parametric model of visual performance based on spatial frequency selective channels in areas V1 and V2.

She was discussing the use of the CHO model for selecting optimal CBCT reconstruction parameters, but I ran across an obvious application of the technique to the problem of JPEG 2000 parameter selection (Zhang et al 2004).

It reminded me of the RDP plugin prototype I had developed a few years back, which demonstrated the ability to dynamically adjust compression performance during medical image review on an RDP or Citrix session. My prototype could also benefit from optimizing the CHO confidence interval (though it used JPEG XR instead of JPEG 2000), but possibly more interesting is the ability to predict the presentation parameters to be delivered over the channel, such as zoom/pan, window/level, or other radiometric filters to be applied to the image. It could also be used to determine when certain regions of the parameter space are not feasible for image review, because the confidence interval collapses (for instance, if you set window width=1).
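As a small illustration of why a window width of 1 leaves an observer (human or model) almost nothing to work with, here is a sketch of a plain linear window/level mapping. This is not the CHO model itself, and the function names are made up for the example; it only counts how many distinct display levels survive a given window.

```python
def apply_window(value: float, level: float, width: float) -> int:
    """Map a raw pixel value to an 8-bit display value using window/level."""
    low = level - width / 2.0
    high = level + width / 2.0
    if value <= low:
        return 0
    if value >= high:
        return 255
    return round(255 * (value - low) / (high - low))


def displayed_levels(pixels, level: float, width: float) -> int:
    """Count how many distinct gray levels survive the window."""
    return len({apply_window(p, level, width) for p in pixels})


if __name__ == "__main__":
    raw = range(100)  # a toy ramp of integer pixel values
    print(displayed_levels(raw, level=50, width=100))
    print(displayed_levels(raw, level=50, width=1))
```

With the wide window every input value keeps its own gray level, while with width=1 the ramp is reduced to a near-binary image, so any detectability measure computed on the displayed image has very little signal left.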

So if anyone asks "What is Image Quality", you can tell them: "Image Quality = Maximized CHO Confidence Interval" (or maximized CHO Area Under Curve). Got it?

P.S. there is a very nice Matlab implementation of the CHO model in a package called IQModelo, with some examples of calculating CI and AUC.

Friday, March 02, 2018

Better Image Review Workflows Part III: Analysis

The central purpose of reviewing images is to extract meaning from the images and then base a decision on this meaning. Currently the human visual system is the only system that is known to be able to extract all the meaning from a medical image, and even then it may need some help.

As algorithms become better at meaning extraction, it's important to keep in mind that anything an algorithm can make of an image should, in theory, also be visible to a human, even if the human needs a little help. This principle can unify the problems of optimal presentation and computer-assisted interpretation, as it provides a method of tuning an algorithm so as to optimize presentation. I went into a little more detail when I wrote about the FDA's Image Quality research.

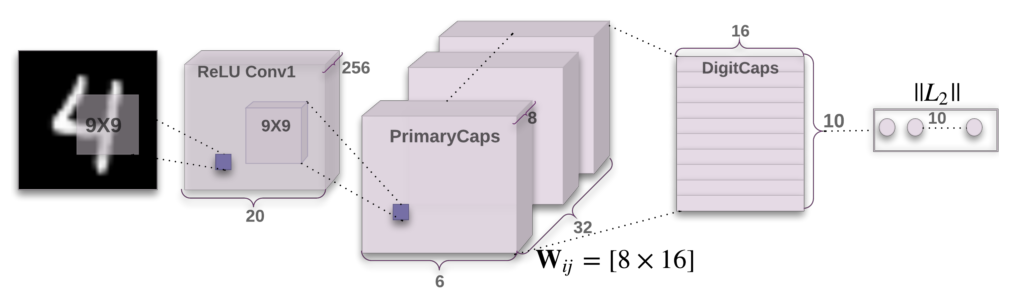

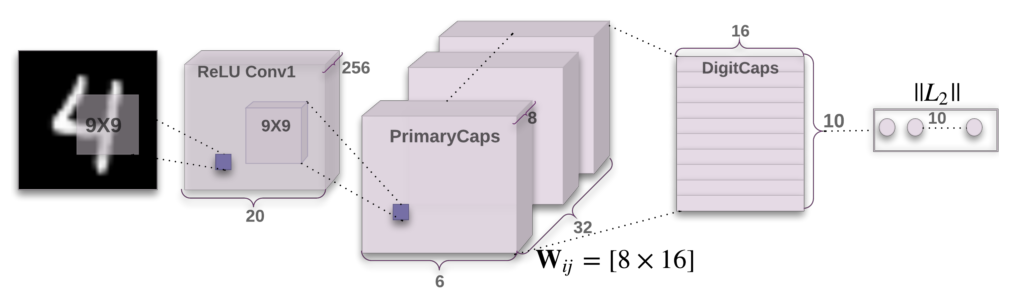

Capsules and Cortical Columns

I'm still trying to figure out what to make of the Capsule routing mechanism proposed by Sabour et al late last year. It is potentially a move toward greater biological plausibility, if the claim is that each Capsule corresponds to a cortical column. If the Capsule network does reflect what happens in the visual system, its routing algorithm would need to be either developed or learned.

My point of reference here is the Shifter circuit (Olshausen 1993), which never explained how its control weights would have been "learned", despite other biologically plausible characteristics. I've always understood that learning control weights is more difficult than learning filter taps for a convolutional network, or RBM weights. I guess that's why reinforcement learning is so much harder to get right than CNN learning. But I never really thought the Shifter control weights would be learned anyway--I expected they would be the result of developmental processes, just as topographic mappings come from development.

The paper on training the Capsule network doesn't mention consideration of an explicit topographic constraint (like in the Topographic ICA). I wonder how difficult it would be to use a dynamic Self-Organizing Map as a means of organizing the units in the PrimaryCaps layer? Would this simplify the dynamic routing algorithm?

LoFi Ascii Pixel

I'm looking at some 'lofi' image processing in F#, and thought I would begin by publishing as gists (because it's very lightweight). I started with a lofi ascii pixel output, so you can roughly visualize an image after you generate it. I will include some test distributions (gabor, non-cartesian stimuli) in a subsequent post.
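For readers who want the general idea without the gist, here is a rough Python sketch of an ascii pixel output. It is not a port of the F# code; the glyph ramp and the Gaussian test image are arbitrary choices made for this example.

```python
import math

RAMP = " .:-=+*#%@"  # dark to bright; an arbitrary choice of glyphs


def to_ascii(image) -> str:
    """Render a 2D list of intensities in [0, 1] as lines of ASCII glyphs."""
    last = len(RAMP) - 1
    lines = []
    for row in image:
        glyphs = []
        for value in row:
            clamped = min(max(value, 0.0), 1.0)
            glyphs.append(RAMP[round(clamped * last)])
        lines.append("".join(glyphs))
    return "\n".join(lines)


def gaussian_blob(size: int):
    """Test image: a Gaussian bump centered in a size x size grid."""
    center = (size - 1) / 2.0
    sigma = size / 4.0
    return [
        [math.exp(-((x - center) ** 2 + (y - center) ** 2) / (2 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]


if __name__ == "__main__":
    print(to_ascii(gaussian_blob(16)))
```

Each pixel becomes one character, so the output is only a rough preview, but it is enough to confirm that a generated distribution has the shape you expected.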

Monday, January 29, 2018

Teramedica Zero-Footprint Viewer

- Server-side rendering is probably the easier option for an organization with limited technical resources. The delays are noticeable in the video, though I guess for an initial "Enterprise Imaging" offering it is probably good enough.

- Web-browser based review is also key to zero footprint viewing

- One could argue that installing an app doesn't really count as "zero footprint", though I guess it does minimize IT resources for deployment

- Ability to deploy on a luminance-controlled monitor (i.e. in a radiology reading room) is probably also essential--I'm not sure Teramedica has that covered yet

- How would this compare to a fat client running on a Citrix farm? Probably somewhat better performance (due to lossy compression, which I guess radiologists are OK with?) but the Citrix farm's scalability would likely not be favorable, especially if OpenGL volume textures were being used for server-side rendering

Monday, August 14, 2017

Better Image Review Workflows Part II: DICOM Spatial Registrations

I remember meeting with our colleagues at Varian's Imaging Lab a few years back, to discuss (among other things) better ways of using DICOM Spatial Registration Objects (SROs) to encode radiotherapy setup corrections. They had been considering support for some advanced features, like 4D CBCT and dynamic DRR generation.

It was clear that having a reliable DICOM RT Plan Instance UID and referenced Patient Setup module would be immensely useful in interpreting the setup corrections. But where would this information be stored? As private attributes in the CBCT slices? Or in the SRO itself? The treatment record contains this relationship for each treatment field, but there is no way of encoding a reference to a standalone setup image within the treatment record (or so I've been told).

Siemens has, for years now, used a DICOM Structured Report to encode offset values, in lieu of an SRO, at least for portal images. I think skipping the SRO entirely was a mistake, but when I look at the state of RT SROs today, it seems in retrospect that a semantically meaningful Structured Report to pull together the other objects (SRO, treatment record, CBCT slices) would be quite an advance over current practice. Looking at some of the defined IODs for structured reports:

- Mammography CAD Structured Report

- Chest CAD Structured Report

- Acquisition Context Structured Report

I've started (again) reading David Clunie's Structured Report book, in hopes of gaining more insight in what can (or should not) be encoded using SRs. It seems like a quite powerful capability--I'm wondering why the RT domain has not made more use of it...

Better Image Review Workflows Part I: Zombie Apocalypse

I was reading a bit on the developing RayCare OIS, and wondered why an optimization algorithm company would have an interest in developing an enterprise oncology informatics system.

It doesn't take much to realize that oncology informatics is rife with opportunities for applying optimization expertise. Analysis of the flood of data is only the most obvious benefit from expertise in machine learning and statistical inference. But even the bread and butter of oncology informatics--managing daily workflows and data--could benefit from this expertise.

For the data generated by daily workflows to be meaningfully used, the workflows (and supporting infrastructure) must be efficient. Such an infrastructure needs to support workflows involving various actors (both people and systems), as well as a consistent data model shared by the actors to represent the entities participating in the workflows.

The DICOM Information Model for radiotherapy provides a relatively consistent, if as yet somewhat incomplete, model of how data is produced and consumed during RT treatment. Such a data model can be used to help streamline workflows, as a semantically consistent data stream can be used as the input to the fabled prefetcher (see Clunie's zombie apocalypse scenario), in order to ensure the right data is available at the right time. And if we know enough about the workflows (i.e. what processing needs to be done; what areas of interest are to be reviewed), then we can even prepare this beforehand, so when the reviewer picks the next item from the worklist, it is all ready to go.

So if RayCare's OIS can make use of some optimization expertise to build a better autonomous prefetch algorithm (and the associated pre-processing and softcopy presentation state determination algorithms), this would probably be a pretty nifty trick.
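To show the basic shape of a worklist-driven prefetcher, here is a deliberately naive Python sketch. It has nothing to do with RayCare's actual design; `Prefetcher`, `loader`, and `lookahead` are hypothetical names, and the "optimization" is just a fixed lookahead over the worklist order.

```python
class Prefetcher:
    """Keeps the data for the next few worklist items loaded ahead of time."""

    def __init__(self, loader, lookahead: int = 2):
        self._loader = loader        # callable: item id -> loaded data
        self._lookahead = lookahead  # how many upcoming items to keep ready
        self._cache = {}

    def prepare(self, worklist) -> None:
        """Load the first `lookahead` items and evict anything not upcoming."""
        upcoming = list(worklist[: self._lookahead])
        for item_id in list(self._cache):
            if item_id not in upcoming:
                del self._cache[item_id]
        for item_id in upcoming:
            if item_id not in self._cache:
                self._cache[item_id] = self._loader(item_id)

    def open(self, item_id):
        """Return (data, was_prefetched) for the item the reviewer picked."""
        if item_id in self._cache:
            return self._cache[item_id], True
        return self._loader(item_id), False
```

A smarter version would replace the fixed lookahead with a prediction of which item the reviewer is likely to pick next, and would run any pre-processing inside the loader so the result is ready to display.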

But getting all the sources of data to fit into the DICOM information model, or (even better) fixing the DICOM information model to make it more suited for purpose, seems to be the big hiccup here. Maybe with RayCare also working on the problem, things will begin to advance. That's what free markets do, and we all want free markets, right?

Friday, March 17, 2017

Better Image Review User Experience

In my previous post, I mentioned three aspects of image review that can be improved. The first of these is the user experience during image review.

The increased use of image-guidance during the course of treatment represents a significant source of knowledge about the delivery, but also an additional burden on daily routines. The subjective tedium of working through a list of images can be lightened by enhancing aspects of the review user experience.

Usability concerns that can be addressed include:

- Color schemes that are better adjusted for visual analysis, for instance with darker colors

- Animated transitions to facilitate visual parsing of state changes, such as pop-up toolbars and changes in image selection

- Use of spatial layout to convey semantic relationships, as in thumbnail and carousel presentations of images in temporal succession

- Progressive rendering and dynamic resizing of image elements, to minimize variations in the availability of network resources

A number of architectural patterns are used to support these techniques. Examples of these patterns are contained in the PheonixRt.Mvvm prototype.

Sunday, April 24, 2016

How to make image review better?

Image review is critical to modern IGRT, and will be equally important for adaptive RT. Yet today most users complain that it is a painful chore. So how do we make image review better?

I think three areas are critical:

- A better image review user experience

- Better workflows to support image review

- Advanced analysis tools to assist in the review process

I'll post my thoughts on areas of improvement for each of these.

Sunday, August 30, 2015

MVVM and SOA for scientific visualization

I've been working on an architecture that combines the simplicity of MVVM for UI interactions with the scalability, pluggability, and distributedness of SOA for processing data for visualization. The first prototype I've implemented is in the form of the PheonixRt.Mvvm application (on GitHub at https://github.com/dg1an3/PheonixRt.Mvvm).

PheonixRt.Mvvm is split into two executables:

- Front-end

  - UI containing the MVVM

  - Interaction with back-end services is via service helpers (using standard .NET events)

- Back-end

  - hosts the services responsible for pre-processing data

  - hosts services that visualize the data, such as calculating an MPR, a mesh intersection, or an isosurface

The visualization services reduce their results to simple primitives:

- Bitmap (including alpha values)

- 2D vector geometry, such as a line, polyline, or polygon

- 2D transformation

These primitives can be exposed as bindable properties on the ViewModel, and any additional rendering can then be done by the View (for instance to add adornments or other rendering styles). So the services are needed to turn the data into these kinds of primitives, and WPF takes it from there.

This prototype also looks at the use of the standby pool as a means of caching large amounts of data to be ready for loading. This is similar to what the Windows SuperFetch feature does for DLLs, but in this case it is large volumetric data being pre-cached.

Sunday, May 17, 2015

Crowd Sourcing and Anonymous Science

Maybe the future of science will be in the form of crowd-sourced anonymous discussions, which will be used to formulate testable hypotheses and experimental design. Then publicly funded labs would pick up the work, perform the experiments, and publish the results. The results would then be discussed by the anonymous crowds.

The anonymity is crucial, because it would act as an objectivity filter. The mechanism of anonymity would specifically act to prevent the formation of visible "personas", because comments would not be traceable to a known identity. So over time the discussions would assume the form of a "collective mind" debating with itself.

Friday, March 27, 2015

word2vec

I've been able to analyze some notes using word2vec, and the extracted "meaning" has some interesting properties. Maybe next I'll run some archived comments from the Mosaiq Users listserv and see how often users talk about

- slow loading of images

- import problems due to inconsistent SRO semantics

- issues with imported structure sets

- re-registering the ImageReview3DForm COM control

[which all point to a need for a better image review capability for Mosaiq users.]

WarpTPS

One of the problems to be addressed for adaptive treatment paradigms is the need to visualize and interact with deformable vector fields (DVFs). While a number of techniques exist for visualizing vector fields, such as heat maps and hedgehog plots, a simple technique is to allow interactive morphing to examine how the vector field is altering the target image to match the source.

WarpTPS Prototype

This is a very old MFC program that allows loading two different images, and then provides a slider to morph back and forth between them.

Note that currently the two images that can be loaded through File > Open Images... must have the same width/height, and are both required to be in .BMP format.

First is a video of Grumpy to Hedgy:

And this slightly more clinically relevant example shows one MR slice being morphed onto another one:

Thursday, March 26, 2015

Ectoplasmic Sonification

The pathway chart from Roche is always a fascinating visual immersion in the panoply of activities in the cytoplasm. But it is static, and visual, and while visualization is a great modality maybe it doesn't provide quite the immersive experience required of such a network. In the words of Ursula Goodenough, “Patterns of gene expression are to organisms as melodies and harmonies are to sonatas. It's all about...sets of proteins..."

So maybe, to take her words literally, what we need is a sonification of the activities in the cytoplasm, to really understand how it all is put together. Then famous DJs could remix the cytoplasm, and even the best will make it on to the dance charts; wouldn't that be groovy?

First they came for the verbs,

and I said nothing because verbing weirds language. Then they arrival for the nouns, and I speech nothing because I no verbs. - Peter Ellis

pheonixrt has a new home!

I just recently completed the GitHub migration, due to Google Code shutting down.

https://github.com/dg1an3/pheonixrt

Three projects are represented:

- pheonixrt is the original inverse planning algorithm based on the convolutional input layer

- WarpTPS is the interactive morphing using TPSs

- ALGT is a set of predicate verification tools

Also, see the references at the end of http://en.wikipedia.org/wiki/Thin_plate_spline for some videos showing WarpTPS.

Sunday, August 25, 2013

How to Fold a JuliaSet

I wrote a JuliaSet animation way back when, that would use a Lissajous curve through the parameter space defining the curves and then draw / erase the resulting fractals. With color effects, it was quite fun to watch even on a 4.77 MHz PC.

So I was reminded when I saw this very nice WebGL-based animation describing how Julia Sets are generated. [Note that you really need to view this site in Chrome or Firefox to get the full effect, as it requires WebGL]. If you've only vaguely understood how the Mandelbrot set is produced, or the relationship between Mandelbrot and Julia sets, then it is worth stepping through the visuals to get a very nice description of the complex math that is used and how the iterations actually produce the fractals.

Plus the animated plot points look a lot like my old JuliaB code, but it's too bad you can't interactively change the plot points.
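For anyone who wants to play with the idea, here is a small Python sketch of the two ingredients: the escape-time iteration z -> z*z + c that defines a Julia set, and a Lissajous curve that walks the parameter c around. This is not the original JuliaB code; the curve frequencies, scale, and viewing window are arbitrary values picked for the example.

```python
import math


def escape_time(z: complex, c: complex, max_iter: int = 50) -> int:
    """Iterate z -> z*z + c; return the step at which |z| first exceeds 2."""
    for step in range(max_iter):
        if abs(z) > 2.0:
            return step
        z = z * z + c
    return max_iter


def lissajous_c(t: float, a: float = 3.0, b: float = 2.0, scale: float = 0.8) -> complex:
    """A point on a Lissajous curve in the c-plane (frequencies are arbitrary)."""
    return complex(scale * math.sin(a * t), scale * math.sin(b * t))


def julia_frame(c: complex, width: int = 60, height: int = 24, max_iter: int = 50) -> str:
    """One ASCII frame: '#' marks points that did not escape within max_iter."""
    rows = []
    for j in range(height):
        y = -1.2 + 2.4 * j / (height - 1)
        row = []
        for i in range(width):
            x = -1.6 + 3.2 * i / (width - 1)
            inside = escape_time(complex(x, y), c, max_iter) == max_iter
            row.append("#" if inside else " ")
        rows.append("".join(row))
    return "\n".join(rows)


if __name__ == "__main__":
    # Print a few frames as c moves along the curve.
    for k in range(4):
        print(julia_frame(lissajous_c(0.3 * k)))
        print("-" * 60)
```

Drawing one frame, erasing it, and stepping t forward gives the same kind of animation, just without the color effects.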

Tuesday, June 04, 2013

John Henry

I would like to visit Hinton WV one of these days. There is a John Henry festival there every year, and an old train runs through the Big Bend tunnel.

The interesting thing about the legend is that it cuts through to the central question: does life have an objective function?
